Workshops: 📈 Ethical Data Project, 🛠️ Practical Tools for Responsible AI, 🖋️ Critical Feminist Interventions in Trustworthy AI and Tech Policy

Mozilla Festival House (MozFest), Amsterdam 2023

Intro

*Scroll directly to Workshops to learn about the content and tools*

Since I’ve only recently entered the space of software development, artificial intelligence and data, I thought at first that attending this conference was premature and that I should focus on my project reading for the engineering doctorate and on coding the prototype. However, while scrolling through the conference schedule, I realized that the workshop topics were ones a project team should dive into from the concept phase of development, and nearly a month into the doctoral program, I hadn’t started exploring them yet.

So I bought a ticket and went, and I’m extremely happy I did! The sessions were very hands-on, I got to meet and exchange ideas with inspiring experts from very diverse fields and backgrounds, and I took home practical tools for myself and my team.

Below, I share some learnings and useful tools from the three workshops I attended. The titles link directly to the schedule page with the full description of each workshop.

Disclaimer: This hub focuses on AI for healthcare, but as mentioned in other sections, everything related to safety, ethics, and responsibility applies to healthcare as well as to other fields. This conference did not focus explicitly on healthcare, but the learnings are still valuable and transferable.

About MozFest 2023 and Mozilla Foundation

“This year, your part in the story is critical to our community’s mission: a better, healthier internet and more Trustworthy AI”. (MozFest)

“The Mozilla Foundation (stylized as moz://a) is an American non-profit organization that exists to support and collectively lead the open source Mozilla project”. (Wikipedia)

Workshops

📈 Make Your Data Project Ethical in Twelve Steps

This was my first workshop of the day, and a great start. It was facilitated by Rijk Mercuur, Ph.D., an impressive professional in the Responsible AI space who is currently a researcher at Hiro. Until last year, he worked in research and taught artificial intelligence and ethics at Utrecht University.

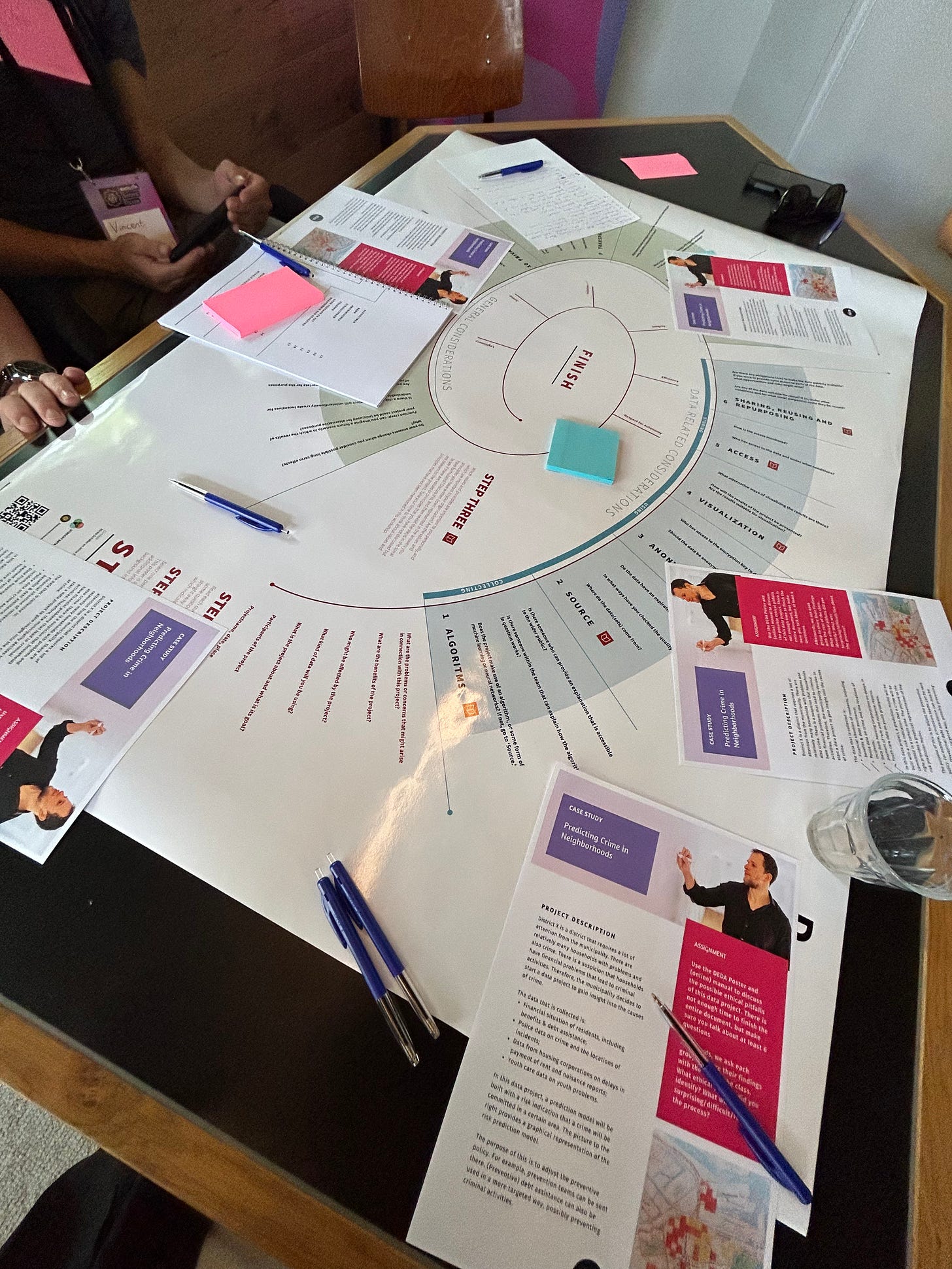

I chose this workshop because its description read like a sales pitch, which is key when you work in industry. The tool he presented is the Data Ethics Decision Aid (DEDA), which guides multidisciplinary teams of data analysts, project managers, and policymakers through 12 steps to recognize ethical issues in data projects, data management, and data policies.

We were given a case study and discussed our reflections on the exercise in breakout and plenary sessions, but time constraints kept us from completing the whole worksheet. Still, it was enough to get the gist of it and to recognize how important it is to think through and align on these topics in professionally diverse groups, because so many different perspectives and reflections come up.

🛠️ Practical Tools for Responsible Tech & AI

The next session was led by an equally impressive professional, Jesse McCrosky, Thoughtworks’ Head of Sustainability and Social Change for Finland and a Principal Data Scientist with 10+ years of experience in academia, government, industry, and consulting. The workshop was co-facilitated by Lisa van de Merwe, a Senior UX Consultant at Thoughtworks.

Jesse talked about AI transparency and Thoughtworks’ AI Design Alignment Analysis Framework, which he authored and which is available as a free download. The framework focuses on aligning an AI system with social responsibility by analyzing it through three lenses: technical function, communicated function, and perceived function. Thoughtworks is also currently working on a Responsible AI ebook. Lisa went on to present more tools, such as the Tarot Cards of Tech (I am obsessed 🤩), which can help a project team think through the impact of a technology before and during its development. The final tool, which we applied during the hands-on part of the session, was the Ethical Explorer. The idea behind these cards is that they help you identify potential risk zones for the technology being developed and provide questions for brainstorming solutions and ethical principles.

The next step after identifying the principles is to reject, rephrase, or accept them, depending on the many variables a project might have (project team, business needs, resources, etc.). This was an interesting point because, as the facilitators mentioned, we tend to focus on outputs rather than reflecting on impacts. A company may decide to take a “less responsible” route, but at the very least it should set aside time to reflect before consciously rejecting a principle.

🖋️ Critical Feminist Interventions in Trustworthy AI and Technology Policy

Last but not least, the final session of the day (kudos to her, because it must have been intense!) was given by another impressive professional, Bogdana Rakova from the Mozilla Foundation, who is an ML engineer, researcher, inventor, and organizer. I also recommend following her on LinkedIn, where she features many interesting links to her work.

Because everyone was eager to share their thoughts and time was limited, the session turned mostly into an interactive plenary discussion of the framework rather than a hands-on workshop with a worked example.

The framework is called Terms-we-Serve-with (TwSw), and it is “a socio-technical framework meant to enable diverse stakeholders to foster transparency, accountability, and engagement in AI, empowering individuals and communities in navigating cases of algorithmic harms and injustice”. The idea behind it is that current consent-to-terms agreements can be misleading and leave individuals powerless when algorithmic harm occurs. The framework supports defining an AI system and data strategy, human-centered user agreements, user experience research, an ontology of user-perceived AI failure modes, contestability mechanisms that empower continuous AI monitoring, and mechanisms that enable the mediation of potential algorithmic harms, risks, and functionality failures when they emerge.

🥳 I hope you enjoyed this piece and learning about these tools!

Do you think these can be useful for your project and/or team?

What are your thoughts on implementing these tools in your daily work?

Do you have more recommendations to share?

💬 It would be great to read your input and insights. You can use the comments section or the Chat section I’ve just enabled for the AI Health Hub community.
